2026

From Disruption to Immersion: Reimagining Vehicle Motion as Environmental Feedback through Force Mappings in In-Car VR

Bocheon Gim, Seongjun Kang, Gwangbin Kim, Dohyeon Yeo, Yumin Kang, Ahmed Elsharkawy, Seungjun Kim

CHI'26: Proceedings of the CHI Conference on Human Factors in Computing Systems 2026 (COND. ACCEPTED) 2026 1st Author

We present a novel approach to in-car virtual reality (VR) that reimagines vehicle-generated forces not as artifacts to suppress, but as immersive feedback cues for enhancing presence and interaction. We introduce the concept of force mappings, a design space that translates vehicle-induced forces such as acceleration, turns, and road texture into ambient environmental representations within VR environments. Implemented on a real vehicle platform with a sensor-based pipeline, our system applies four representative mapping strategies (Ground-based, Wind-based, Current-based, Object-based) and evaluates their perceptual coherence and experiential effects through two user studies. Results show that force mappings can improve presence, comfort, and engagement while enabling creative reinterpretations of physical motion. Finally, we provide empirical findings and design guidelines that position vehicle motion as a generative medium for multisensory in-car VR applications.

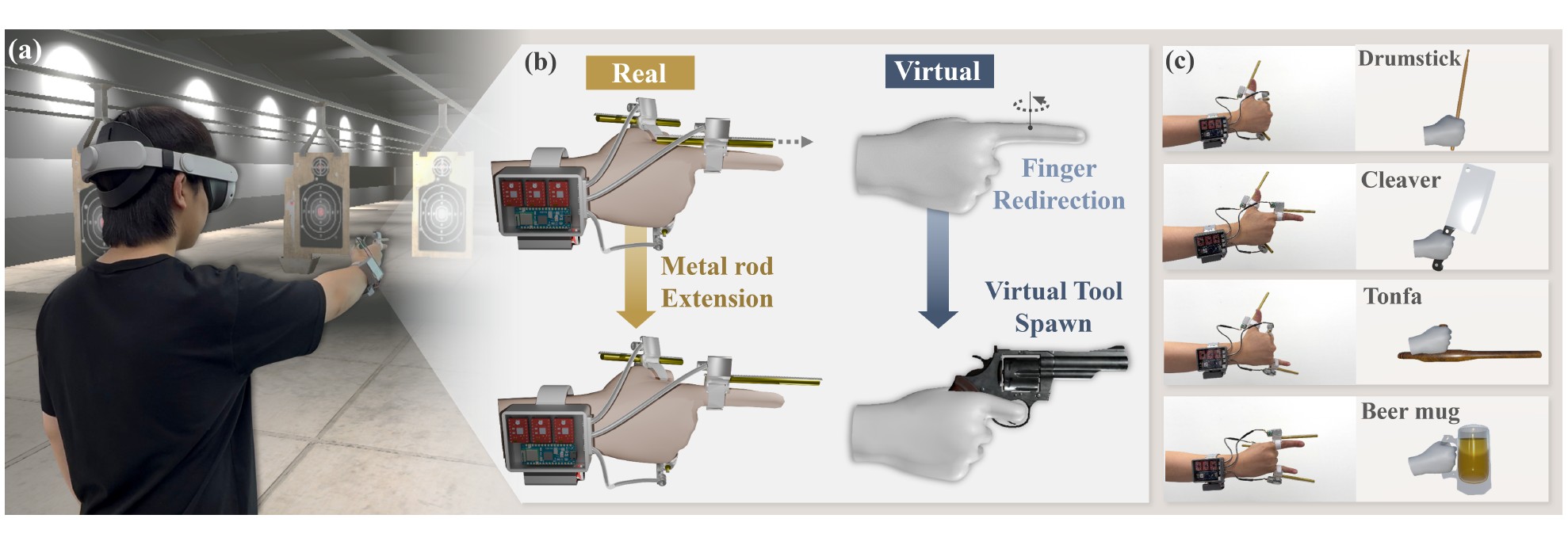

When Fingers Become Tools: Rendering Virtual Tool Inertia with a Finger-Mounted Extending Rod

Seongjun Kang, Gwangbin Kim, Bocheon Gim, Jeongju Park, Juwon Um, Semoo Shin, Chanyoung Park, Seungjun Kim

CHI'26: Proceedings of the CHI Conference on Human Factors in Computing Systems 2026 (COND. ACCEPTED) 2026

This paper presents the Finger-Mounted Extending Rod, a wearable device that transforms fingers into virtual tools by modulating fingertip mass distribution. The system employs linear actuators on the fingers that extend or retract metal rods according to finger poses, generating rotational inertia while redirecting the hand to natural grip postures. Through three user studies, we evaluate (1) finger pose embodiment under visual redirection and tool matching via inertia tensor similarity, (2) perception of tool length and rotational inertia, and (3) tool identification accuracy and user experience. Results show that 10 of 15 poses maintained embodiment, with inertia tensor similarities of 0.936–0.991 for tool-pose pairs. Users perceived inertia with 4.19–10.45× amplification and achieved 52.9% identification accuracy, exceeding chance level (16.7%). The Inertia-aligned condition enhanced immersion, realism, and enjoyment compared to Inertia-misaligned and No-Finger Rod conditions across six VR scenarios. We conclude by discussing how the system renders virtual tools without handheld controllers.
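
To illustrate why extending a rod changes the felt rotational inertia, here is a minimal sketch based on the textbook parallel-axis theorem. It is not the authors' model: the rod mass, length, and offsets are hypothetical values, and `rod_inertia` is an illustrative name.

```python
def rod_inertia(mass_kg: float, length_m: float, offset_m: float) -> float:
    """Moment of inertia (kg*m^2) of a uniform rod about an axis perpendicular
    to the rod, passing through a pivot located on the rod's line.

    offset_m is the distance from the pivot to the rod's near end.
    Parallel-axis theorem: I = m * (L^2 / 12 + d^2), with d the distance
    from the pivot to the rod's center of mass.
    """
    center_dist = offset_m + length_m / 2.0
    return mass_kg * (length_m ** 2 / 12.0 + center_dist ** 2)


# Hypothetical 30 g, 10 cm rod: near end at the pivot vs. pushed out by 5 cm.
retracted = rod_inertia(0.03, 0.10, 0.00)
extended = rod_inertia(0.03, 0.10, 0.05)
print(f"retracted: {retracted:.6f} kg*m^2, extended: {extended:.6f} kg*m^2")
```

Under these assumed values, moving the same mass farther from the pivot raises the inertia, which is the general effect the abstract describes.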

2025

Demonstration of Multisensory In-Car VR: Repurposing the Vehicle’s HVAC System and Power Seat for Immersive Haptic Feedback

Dohyeon Yeo, Gwangbin Kim, Minwoo Oh, Jeongju Park, Bocheon Gim, Seongjun Kang, Ahmed Elsharkawy, Seungjun Kim

ISMAR '25 Adjunct: IEEE International Symposium on Mixed and Augmented Reality Adjunct (COND. ACCEPTED) 2025 🏆Best Demo

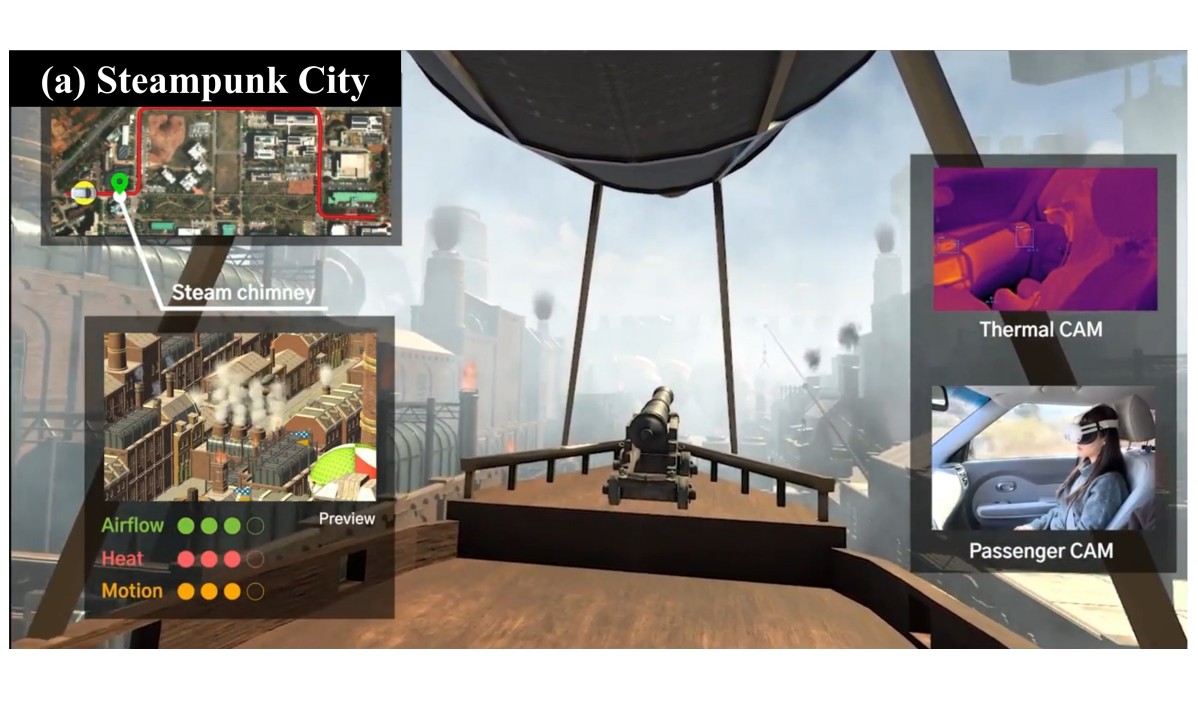

We demonstrate a novel in-car Virtual Reality (VR) platform that provides multisensory feedback without requiring external hardware. Our system leverages the vehicle's Heating, Ventilation, and Air Conditioning (HVAC) and power seat systems to generate synchronized thermal, airflow, and motion feedback. These physical sensations are designed to operate in coordination with the visual experience, enhancing a passenger's sense of presence while reducing the potential for motion sickness. This demonstration shows how existing automotive components can be transformed into an effective and scalable platform for immersive entertainment.

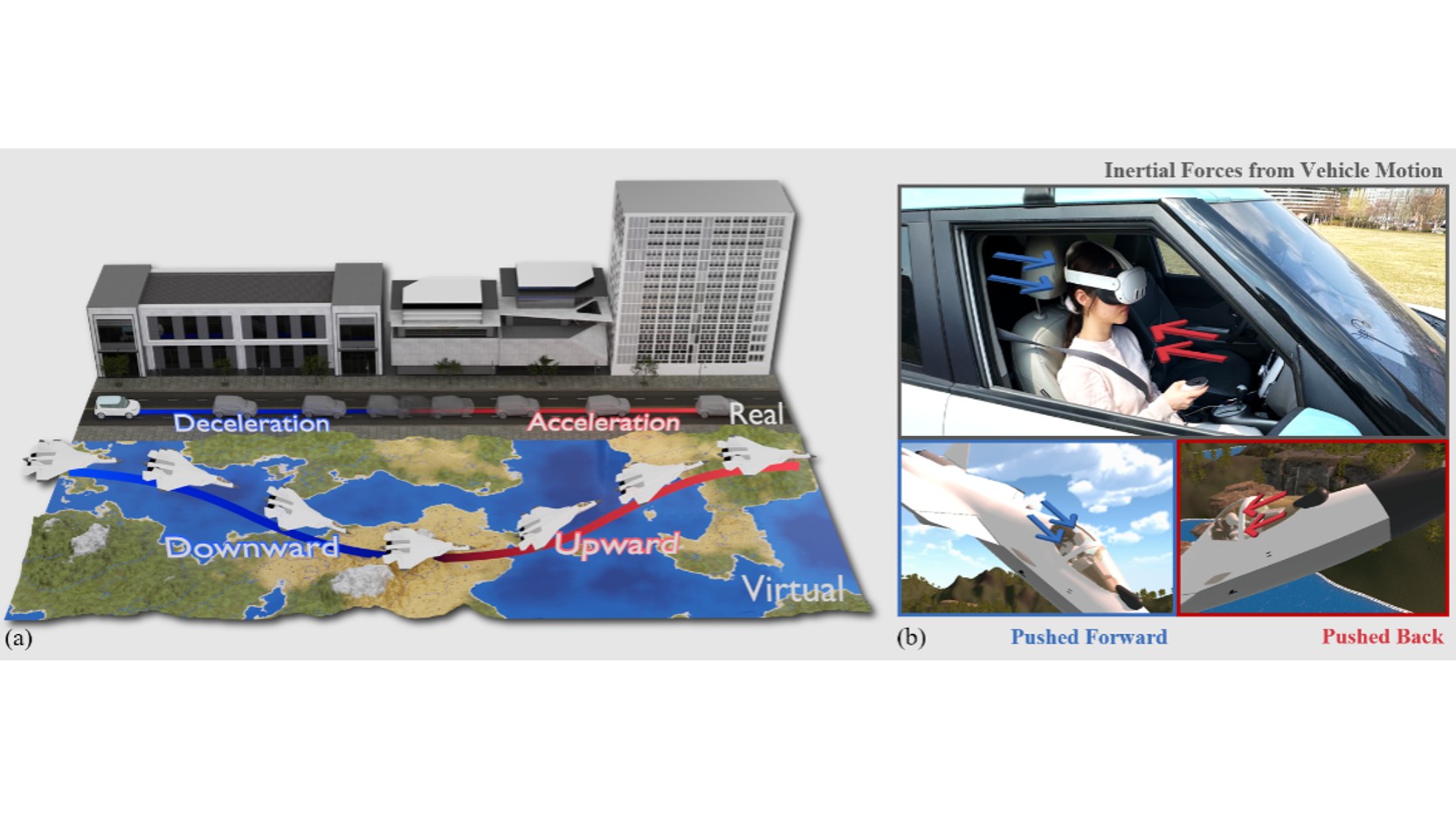

Defying Gravity: Towards Gravitoinertial Retargeting of Acceleration for Virtual Vertical Motion in In-Car VR

Bocheon Gim, Seongjun Kang, Dohyeon Yeo, Gwangbin Kim, Juwon Um, Jeongju Park, Seungjun Kim

ISMAR 2025: IEEE International Symposium on Mixed and Augmented Reality (COND. ACCEPTED) 2025 1st Author

In-car VR applications typically synchronize virtual motion with real vehicle movement to minimize visual-vestibular mismatch. However, this approach limits virtual movement to directions in which the vehicle can physically move, typically restricting the experience to horizontal motion. This study introduces a method to expand the range of virtual motion by simulating vertical movement, leveraging vehicle acceleration to induce a vertical pitch illusion via manipulation of gravitoinertial perception. We conducted a two-phase study evaluating (1) optimal vertical gain values for maximizing perceptual realism in a controlled environment and (2) user experience factors such as motion sickness and presence in an on-road VR flight simulation under realistic driving conditions. Our findings show that users tend to prefer vertical gains that exceed theoretically valid mappings, and highlight the importance of aligning virtual motion with perceived inertial cues to enhance the realism and coherence of vertical motion in in-car VR applications.
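
As a rough illustration of the gravitoinertial idea in this abstract, the sketch below uses the standard relation that a sustained longitudinal acceleration `a` tilts the combined gravity-plus-inertia vector by `atan(a / g)`. It is not the paper's implementation: `vertical_gain`, the function names, and the sine-based climb mapping are assumptions made for this example.

```python
import math

G = 9.81  # approximate standard gravity, m/s^2


def gravitoinertial_pitch_deg(accel_mps2: float) -> float:
    """Tilt (degrees) of the gravitoinertial vector for a longitudinal acceleration."""
    return math.degrees(math.atan2(accel_mps2, G))


def virtual_climb_rate(speed_mps: float, accel_mps2: float,
                       vertical_gain: float = 1.0) -> float:
    """Hypothetical mapping: vertical speed of the virtual vehicle when the
    perceived pitch is scaled by vertical_gain before being applied."""
    pitch_rad = math.radians(gravitoinertial_pitch_deg(accel_mps2)) * vertical_gain
    return speed_mps * math.sin(pitch_rad)


# Example: 10 m/s forward speed, 2 m/s^2 acceleration, two assumed gain values.
for gain in (1.0, 2.0):
    print(gain, round(virtual_climb_rate(10.0, 2.0, gain), 3))
```

A gain above 1.0 in this sketch exaggerates the virtual climb relative to the physically implied pitch, which is one way to read the reported preference for gains beyond the theoretical mapping.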

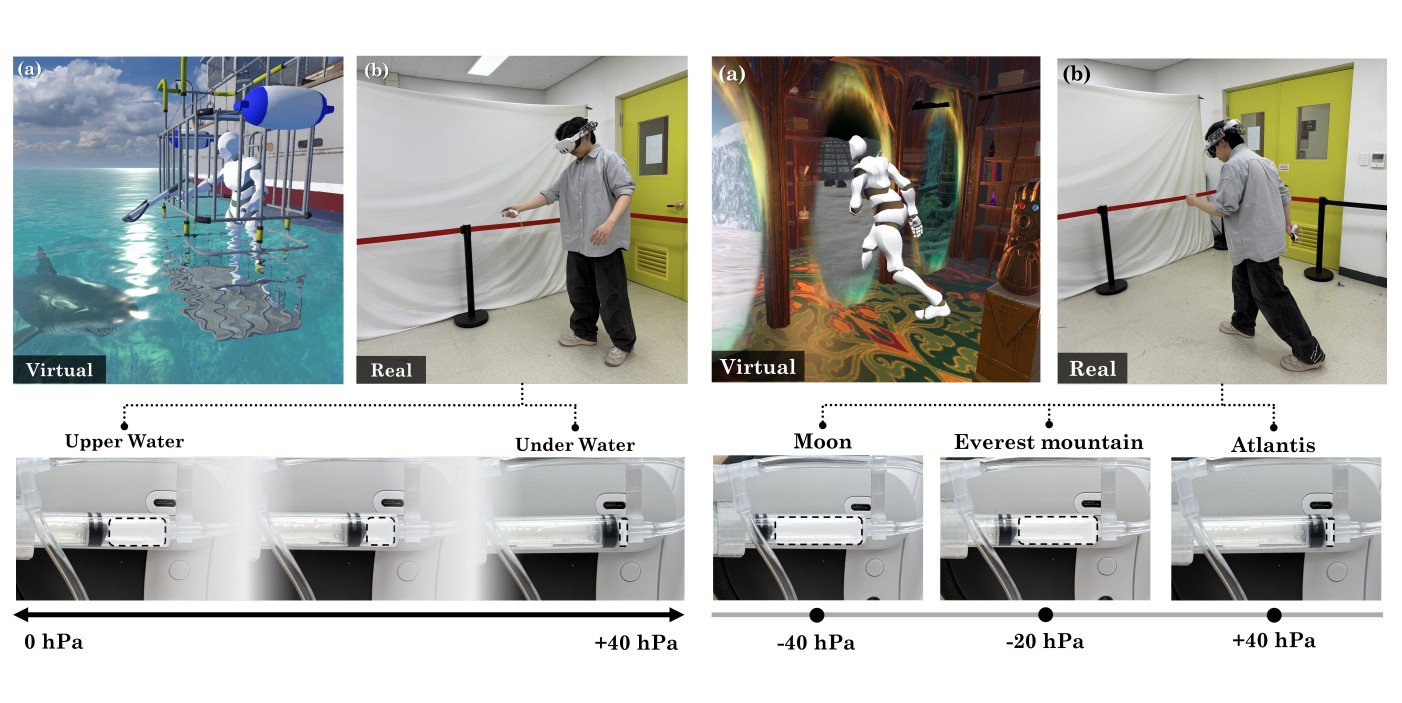

Demonstration of EarPressure VR: Ear Canal Pressure Feedback for Enhancing Environmental Presence in Virtual Reality

Seongjun Kang, Gwangbin Kim, Bocheon Gim, Jeongju Park, Semoo Shin, Seungjun Kim

UIST '25 Adjunct: Adjunct Proceedings of the ACM Symposium on User Interface Software and Technology 2025

We demonstrate EarPressure VR, a novel haptic system that enhances environmental presence in virtual reality by simulating atmospheric pressure changes through controlled air pressure modulation in the user's ear canal. The system uses a VR headset with sealed earbuds and a motor-driven syringe to safely generate pressure variations that simulate sensations like underwater depth or high-altitude environments (within ±40 hPa of the ambient level). Attendees will experience two interactive scenarios: a gradual underwater descent, feeling a steady increase in inward pressure synchronized with depth; and instantaneous portal travel between high- and low-pressure worlds, feeling sudden and distinct environmental shifts. This demonstration showcases how leveraging the ear canal as a haptic channel can create more physically grounded and immersive virtual experiences.

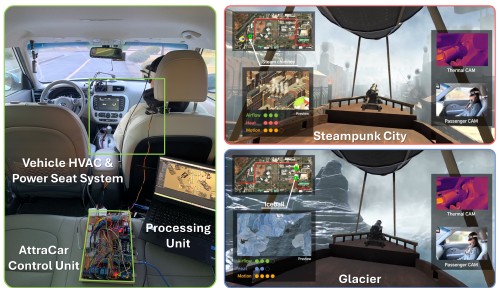

Demonstration of AttraCar: Multisensory In-Car VR with Thermal, Airflow, and Motion Feedback through Built-In Vehicle Systems

Dohyeon Yeo, Gwangbin Kim, Minwoo Oh, Jeongju Park, Bocheon Gim, Seongjun Kang, Ahmed Elsharkawy, Seungjun Kim

UIST '25 Adjunct: Adjunct Proceedings of the ACM Symposium on User Interface Software and Technology 2025 🏆Honourable Mention Jury, 🏆Best Demo People

We introduce AttraCar, a multisensory in-car Virtual Reality (VR) platform that delivers thermal, airflow, and motion feedback using built-in vehicle systems. By controlling the Heating, Ventilation, and Air Conditioning (HVAC) system and the power seat, our platform enables the creation of multisensory, hardware-free in-car VR designed to enhance immersion and mitigate motion sickness. This demonstration allows attendees to experience these effects firsthand, showcasing a practical and scalable approach for future immersive automotive applications.

EarPressure VR: Ear Canal Pressure Feedback for Enhancing Environmental Presence in Virtual Reality

Seongjun Kang, Gwangbin Kim, Bocheon Gim, Jeongju Park, Semoo Shin, Seungjun Kim

UIST '25: Proceedings of the ACM Symposium on User Interface Software and Technology 2025

This study presents EarPressure VR, a system that modulates ear canal pressure to simulate atmospheric pressure changes in virtual reality (VR). EarPressure VR employs sealed earbuds and a linear stepper motor–driven syringe to generate controlled pressure variations within safe limits (±40 hPa relative to ambient pressure). Through two user studies, we evaluate (1) perceptual thresholds for detecting ear pressure in terms of direction (inward or outward) and intensity differences, and (2) the effect of ear pressure feedback on users’ sense of environmental presence across two VR scenarios involving gradual and discrete changes in ambient pressure. Results show that participants reliably identified pressure direction at thresholds of +14.4 hPa (inward) and –23.8 hPa (outward), and intensity differences at ±14.6% and ±34.9%, respectively. Pressure feedback significantly improved presence ratings, particularly when pressure variation was continuously adjusted to reflect environmental transitions. We conclude by discussing the broader applicability of ear canal pressure feedback in areas such as training, simulation, and everyday experiences.
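
The numbers in this abstract can be tied together in a small sketch. It assumes positive offsets mean inward pressure (following the sign convention of the reported thresholds) and uses a hypothetical `hpa_per_meter` scale for a virtual dive. The function names and the scale are illustrative and not taken from the paper.

```python
SAFE_LIMIT_HPA = 40.0           # safe range stated in the abstract (+/- 40 hPa)
INWARD_THRESHOLD_HPA = 14.4     # reported direction threshold, inward
OUTWARD_THRESHOLD_HPA = -23.8   # reported direction threshold, outward


def clamp_offset(offset_hpa: float) -> float:
    """Keep a requested pressure offset inside the safe range."""
    return max(-SAFE_LIMIT_HPA, min(SAFE_LIMIT_HPA, offset_hpa))


def depth_to_offset(depth_m: float, hpa_per_meter: float = 2.0) -> float:
    """Hypothetical linear mapping from virtual depth to an ear-pressure offset.

    Real hydrostatic pressure rises by roughly 98 hPa per meter of water, far
    beyond the safe range, so any such mapping has to be scaled down and clamped.
    """
    return clamp_offset(depth_m * hpa_per_meter)


def perceived_direction(offset_hpa: float):
    """Return 'inward', 'outward', or None if below the reported thresholds."""
    if offset_hpa >= INWARD_THRESHOLD_HPA:
        return "inward"
    if offset_hpa <= OUTWARD_THRESHOLD_HPA:
        return "outward"
    return None


for depth in (5.0, 10.0, 100.0):
    offset = depth_to_offset(depth)
    print(depth, offset, perceived_direction(offset))
```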

AttraCar: Multisensory In-Car VR with Thermal, Airflow, and Motion Feedback through Built-In Vehicle Systems

Dohyeon Yeo, Gwangbin Kim, Minwoo Oh, Jeongju Park, Bocheon Gim, Seongjun Kang, Ahmed Elsharkawy, Seungjun Kim

UIST '25: Proceedings of the ACM Symposium on User Interface Software and Technology 2025

We introduce AttraCar, a novel multisensory in-car Virtual Reality (VR) platform that delivers thermal, airflow, and motion feedback using built-in vehicle systems. The platform uses the Heating, Ventilation, and Air Conditioning (HVAC) system for airflow and thermal variation and the power seat for motion feedback, with perceptual thresholds determined through Just Noticeable Difference (JND) experiments. A user study evaluated six feedback conditions (Baseline, Ambient Airflow, Thermal Airflow, Seat Motion, Ambient Airflow + Seat Motion, Thermal Airflow + Seat Motion) during on-road VR scenarios. A subsequent on-road study demonstrates that different combinations of feedback are not only perceptually distinct but also highly effective in a dynamic VR context, significantly mitigating motion sickness and enhancing presence and haptic experience. We conclude with reflections on design considerations, integration challenges, and real-world applicability for scalable multisensory in-car VR systems utilizing existing vehicle components.

TherMusic: A Valence-Arousal-Based Music Emotion Classifier and Thermal Feedback Headset System

Seongjun Kang, Bocheon Gim, Juwon Um, Seungjun Kim

KIISE Korea Computer Congress 2025 🏆Best Paper Award

This study proposes a wearable system that delivers a synesthetic user experience by analyzing the user's emotional state in real time while listening to music and providing corresponding thermal stimuli. The proposed system estimates the emotional content of music based on the Arousal–Valence model and maps this emotional information to temperature stimuli delivered through a Peltier-based thermal feedback device. This research quantifies the systematic relationship between musical emotions and physical thermal stimuli and extends this relationship into an affective interface grounded in emotion–temperature correlations. Ultimately, the study contributes to the design of emotion-centered immersive interaction systems.
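
The abstract does not state how emotion estimates are converted to temperature, so the following is purely an illustrative sketch of one possible pipeline: a quadrant label on the valence-arousal plane plus a linear arousal-to-temperature mapping. The neutral temperature, the offset range, the quadrant labels, and the choice of arousal as the driving dimension are all assumptions.

```python
NEUTRAL_TEMP_C = 32.0  # hypothetical neutral contact temperature
MAX_DELTA_C = 4.0      # hypothetical maximum warm/cool offset


def emotion_quadrant(valence: float, arousal: float) -> str:
    """Label the quadrant of the valence-arousal plane (inputs in [-1, 1])."""
    if arousal >= 0:
        return "excited" if valence >= 0 else "tense"
    return "calm" if valence >= 0 else "sad"


def target_temperature_c(arousal: float) -> float:
    """Hypothetical linear mapping from arousal in [-1, 1] to a set-point in Celsius."""
    clamped = max(-1.0, min(1.0, arousal))
    return NEUTRAL_TEMP_C + MAX_DELTA_C * clamped


# Example: an energetic, positive passage vs. a slow, negative one.
for valence, arousal in ((0.7, 0.8), (-0.6, -0.5)):
    print(emotion_quadrant(valence, arousal), target_temperature_c(arousal))
```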

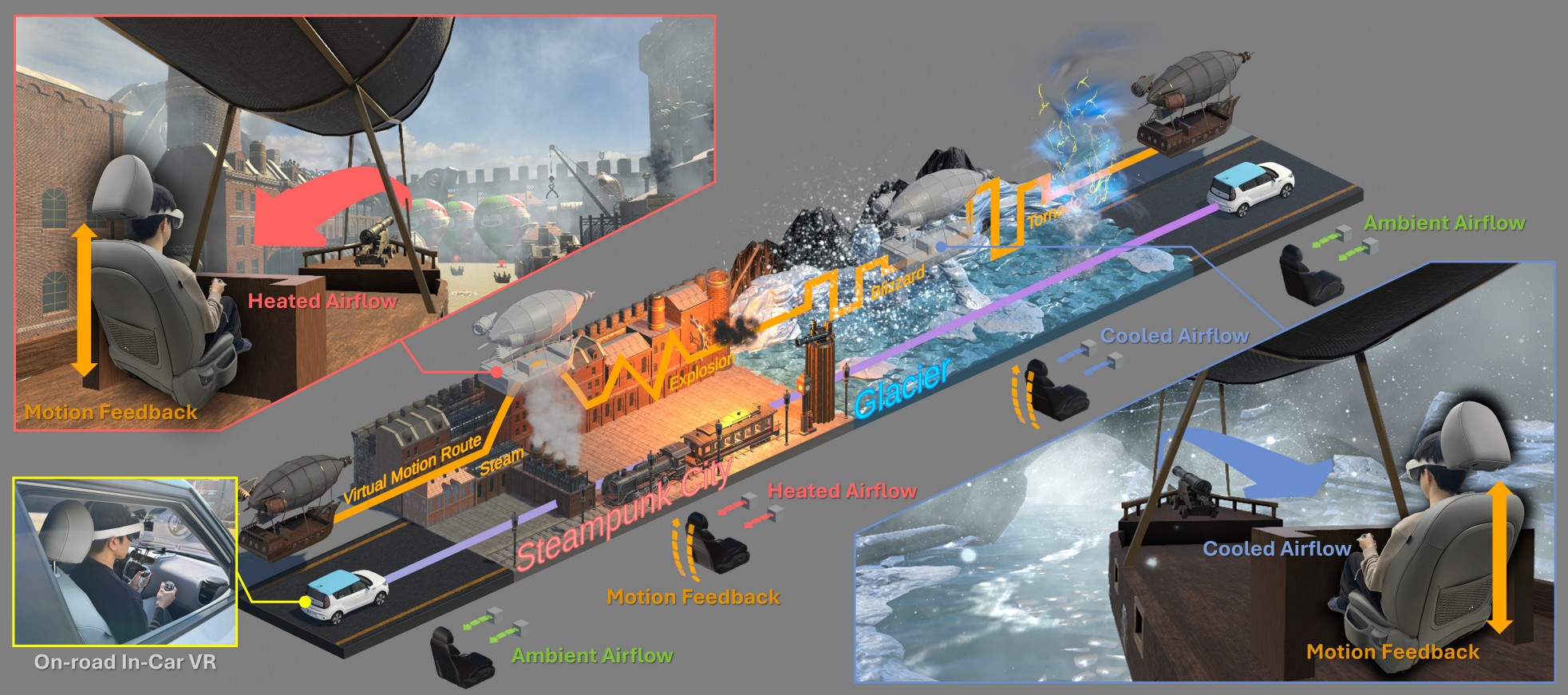

Utilizing Real-Time Video Matting to create a Scalable System for Real Hand Visualization and Interaction within Augmented Virtuality

Bocheon Gim, Seongjun Kang, Juwon Um, Seungjun Kim

KIISE Korea Computer Congress 2025 1st Author

This paper proposes an Augmented Virtuality (AV)-based system that enables real-time extraction, visualization, and interaction with a user's actual hands within a virtual environment. To overcome the limitations of conventional 3D model-based hand representations, the system utilizes egocentric RGB video captured by a ZED Mini stereo camera and applies the Robust Video Matting (RVM) model to segment the hand region. The segmented hands are then visualized within a Unity-based virtual environment. Despite its simple hardware configuration and low computational latency, the system achieves high visual fidelity and demonstrates the feasibility of various hand-based interactions by integrating with Oculus hand tracking capabilities.

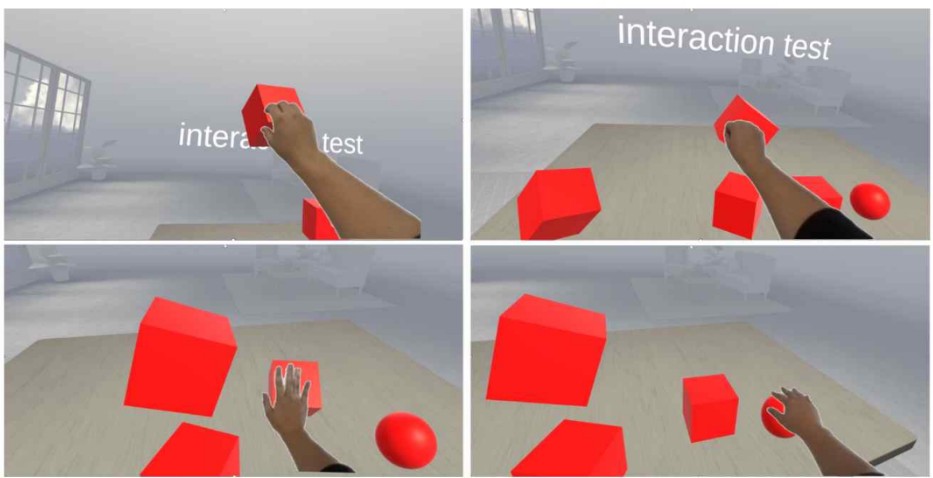

TeleHopper: Simulating a Jumping Sensation as Proprioceptive Feedback for Teleportation in Virtual Reality via Electrical Muscle Stimulation

Juwon Um, Bocheon Gim, Seongjun Kang, Yumin Kang, Eunki Jeon, Seungjun Kim

CHI EA '25: Extended Abstracts of the CHI Conference on Human Factors in Computing Systems 2025

Teleportation, a method of instantly moving users to a target position, has become a widely adopted locomotion method in virtual reality. However, the lack of proprioceptive feedback for teleportation can diminish presence and increase workload, thereby limiting the overall user experience. In this study, we propose TeleHopper, a system that enhances the teleportation experience by simulating the sense of jumping during teleportation through Electrical Muscle Stimulation-based haptic feedback. TeleHopper induces leg movements resembling a jumping motion and adjusts stimulation intensity based on travel distance, creating a realistic proprioceptive perception of leaping through space during teleportation. Experimental results evaluating TeleHopper's user experience showed a significant enhancement in sense of presence, as well as a significant reduction in mental workload. Through this study, we demonstrate TeleHopper's ability to deliver compelling proprioceptive feedback in teleportation, with varying stimulation intensity enhancing realism and aiding travel distance estimation.

I Want to Break Free: Enabling User-Applied Active Locomotion in In-Car VR through Contextual Cues

Bocheon Gim, Seokhyun Hwang, Seongjun Kang, Gwangbin Kim, Dohyeon Yeo, Seungjun Kim

CHI '25: Proceedings of the CHI Conference on Human Factors in Computing Systems 2025 1st Author

We explore the feasibility of active user-applied locomotion in virtual reality (VR) within in-car environments through a two-step study, by examining the effects of locomotion method on user experience in dynamic environments as well as evaluating contextual cues designed to mitigate sensory mismatch posed by vehicle movement. The first study evaluated five locomotion methods, identifying joystick-based navigation as the most suitable for in-car use due to its low physical demand and stability within the dynamic vehicle environment. The second study focused on designing and testing various contextual cues that translate vehicle movements into virtual effects, aiming to integrate sensory inputs from the vehicle without limiting the user’s freedom of movement. Along with results in which the implemented contextual cues effectively lowered motion sickness and increased presence, we conclude with a set of initial insights and design considerations into expanding the range of potential in-car VR applications by enabling active locomotion.

2024

Curving the Virtual Route: Applying Redirected Steering Gains for Active Locomotion in In-Car VR

Bocheon Gim, Seongjun Kang, Gwangbin Kim, Dohyeon Yeo, Seokhyun Hwang, Seungjun Kim

CHI EA '24: Extended Abstracts of the CHI Conference on Human Factors in Computing Systems 2024 1st Author

This study examines the feasibility of user-applied active locomotion in In-Car Virtual Reality (VR), overcoming the passivity in mobility of previous In-Car VR experiences where the virtual movement was synchronized with the real movement of the car. We present the concept of virtual steering gains to quantify the magnitude of user-applied redirection from the real car's path. Through a user study where participants applied various levels of steering gains in an active virtual driving task, we assessed usability factors through measures of motion sickness, spatial presence, and overall acceptance. Results indicate a range of acceptable steering gains in which active locomotion improves spatial presence without significantly increasing motion sickness. Future work will attempt to further validate a steering gain threshold within which active locomotion in In-Car VR is applicable.
